Low code scraper platform

The Low Code Scraper Platform was a project whose development I led at MakeBetter Consulting. The goal was to build a configurable web scraping platform for internal projects, so that non-technical team members could set up and run scraping tasks themselves.

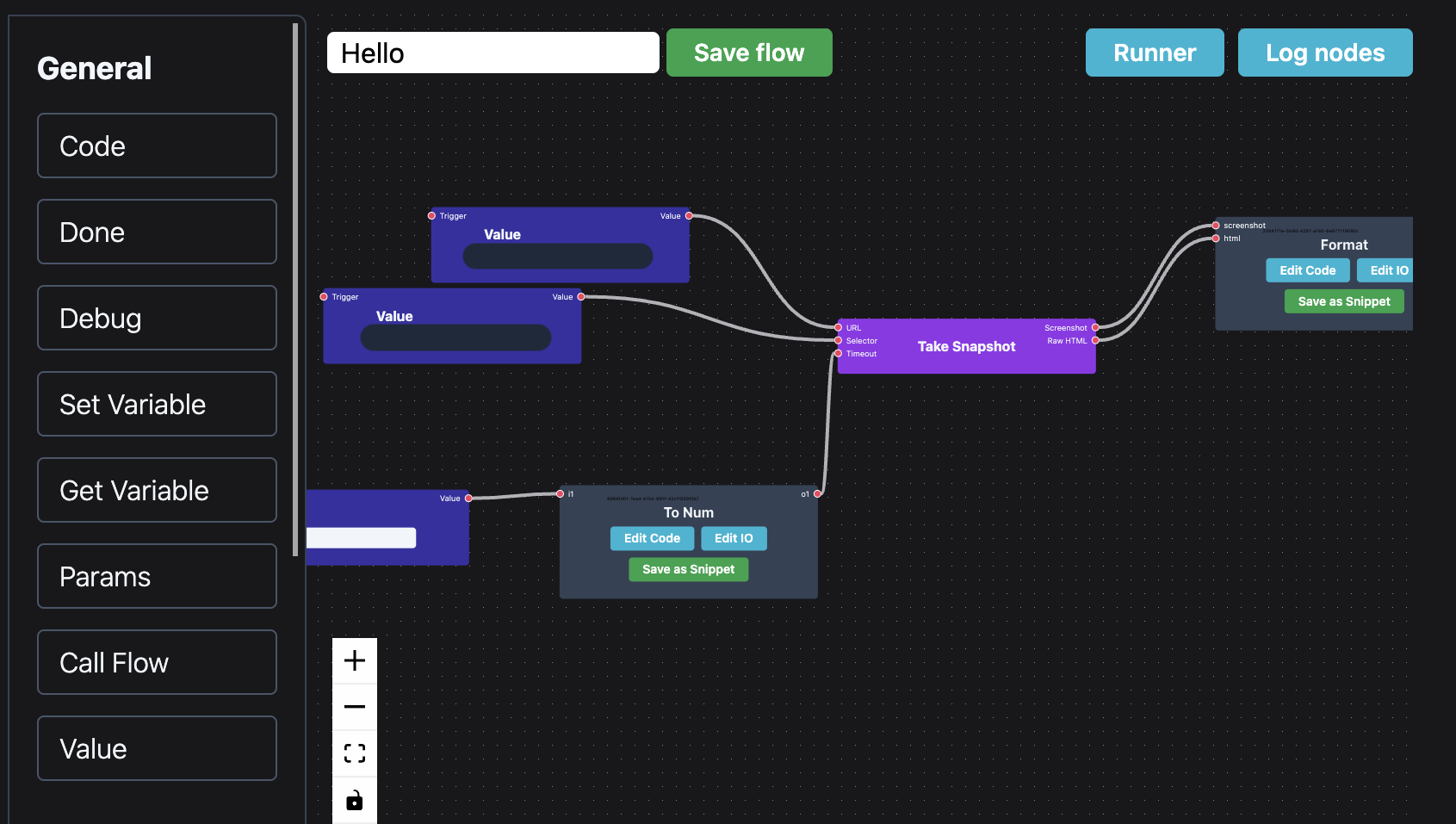

The frontend was built with React Flow, which gave users a flowchart-like interface for laying out their scraping process. The available blocks covered taking screenshots, downloading and storing images, fetching raw HTML/JSON, and organizing data into logical chunks for later processing. There were also blocks for control flow, variables, executing sandboxed JavaScript, and calling other flows, which made the platform Turing complete. Once a flowchart was created, it was serialized to JSON and sent to the backend for execution.
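To make the serialization step concrete, here is a minimal sketch of what a flow and its JSON payload could look like. The node and edge shapes loosely mirror React Flow's (`id`, `type`, `position`, `data`, and `source`/`target`), but the block kinds, the `Flow` wrapper, and the `serializeFlow` helper are assumptions made for illustration, not the platform's actual schema.

```typescript
// Illustrative block kinds; the platform's real identifiers are not given in this write-up.
type BlockKind = "fetchHtml" | "screenshot" | "condition" | "storeData";

interface FlowNode {
  id: string;
  type: BlockKind;
  position: { x: number; y: number }; // canvas layout only; ignored at execution time
  data: Record<string, unknown>; // per-block settings entered by the user
}

interface FlowEdge {
  id: string;
  source: string; // id of the block the edge leaves
  target: string; // id of the block the edge enters
  sourceHandle?: string; // e.g. "true" or "false" on a condition block
}

interface Flow {
  name: string;
  nodes: FlowNode[];
  edges: FlowEdge[];
}

// Check that every edge connects two existing blocks, then serialize to JSON.
function serializeFlow(flow: Flow): string {
  const hasNode = (id: string): boolean => flow.nodes.some((n) => n.id === id);
  for (const edge of flow.edges) {
    if (!hasNode(edge.source) || !hasNode(edge.target)) {
      throw new Error(`Edge ${edge.id} references a missing block`);
    }
  }
  return JSON.stringify(flow);
}

// A hypothetical three-block flow: fetch a page, check the response, take a screenshot.
const exampleFlow: Flow = {
  name: "product-page",
  nodes: [
    { id: "1", type: "fetchHtml", position: { x: 0, y: 0 }, data: { url: "https://example.com" } },
    { id: "2", type: "condition", position: { x: 200, y: 0 }, data: { expression: "status === 200" } },
    { id: "3", type: "screenshot", position: { x: 400, y: 0 }, data: { fullPage: true } },
  ],
  edges: [
    { id: "e1", source: "1", target: "2" },
    { id: "e2", source: "2", target: "3", sourceHandle: "true" },
  ],
};

// The string that would be sent to the backend.
const payload: string = serializeFlow(exampleFlow);
```

Validating edges before serializing is one way to catch a half-wired flowchart in the browser, before it ever reaches a worker.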

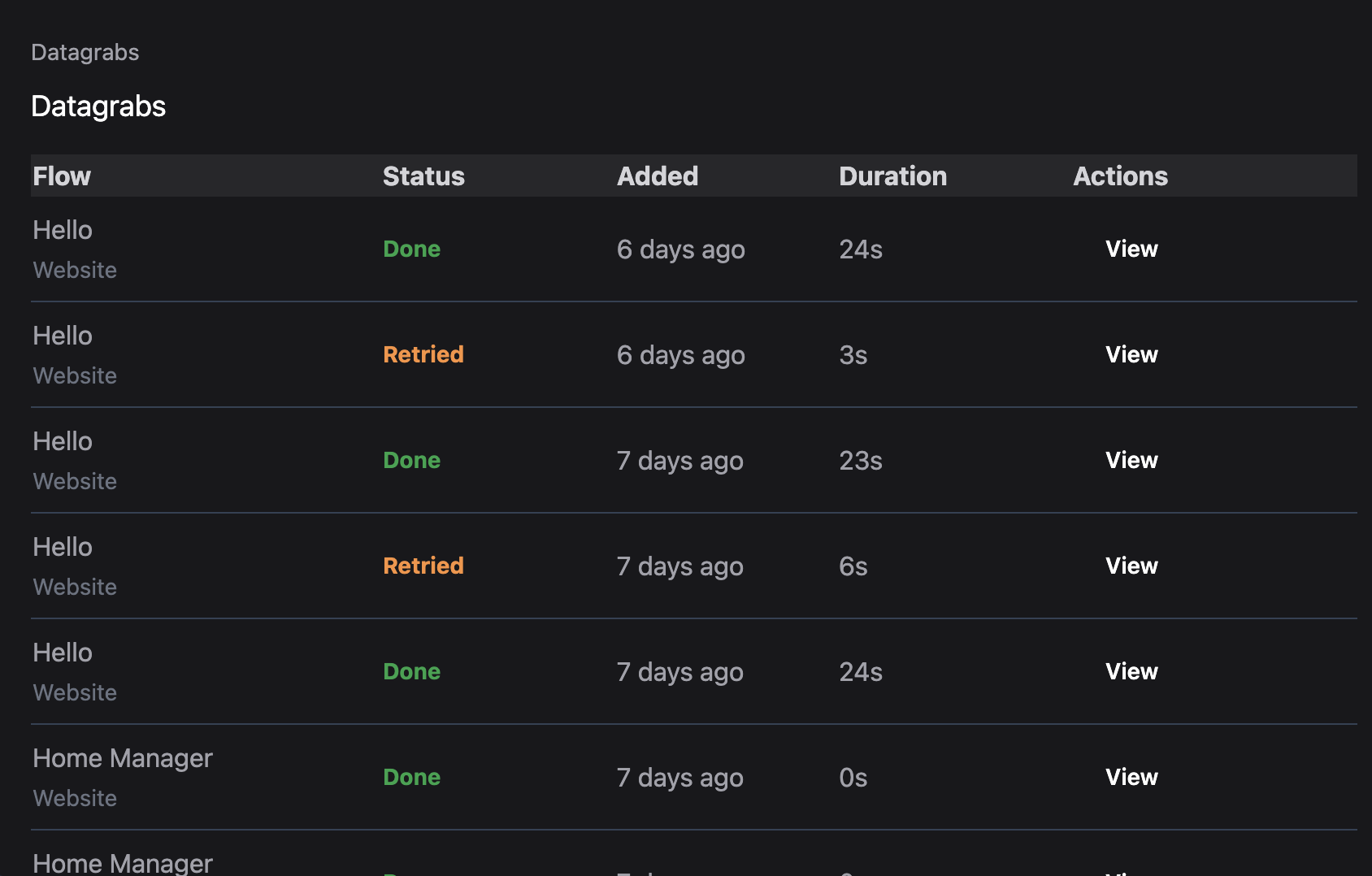

The backend was an event-driven execution environment for running the flows. When a flow was triggered, it was added to a queue and picked up by a Docker container for execution. Progress was reported to the user over a WebSocket, and any asynchronous sub-flows were added to the main queue once the job was done.
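The queue-and-worker loop described above can be sketched roughly as follows. This is a simplified, in-memory stand-in: `FlowQueue`, `FlowJob`, `FlowProgress`, and `runWorker` are hypothetical names, the queue replaces whatever message broker was actually used, and the `notify` callback stands in for the WebSocket connection to the user.

```typescript
interface FlowJob {
  flowId: string;
  steps: string[]; // block names, in execution order
  asyncSubFlows: FlowJob[]; // flows to schedule once this job has finished
}

interface FlowProgress {
  flowId: string;
  step: string;
  completed: number;
  total: number;
}

// In-memory FIFO queue standing in for a real message broker.
class FlowQueue {
  private jobs: FlowJob[] = [];

  enqueue(job: FlowJob): void {
    this.jobs.push(job);
  }

  dequeue(): FlowJob | undefined {
    return this.jobs.shift();
  }
}

// Drain the queue the way a single worker container might.
// `notify` stands in for pushing a message over the user's WebSocket.
function runWorker(queue: FlowQueue, notify: (event: FlowProgress) => void): void {
  let next = queue.dequeue();
  while (next !== undefined) {
    const job = next;
    job.steps.forEach((step, index) => {
      // A real worker would execute the block here before reporting progress.
      notify({ flowId: job.flowId, step, completed: index + 1, total: job.steps.length });
    });
    // Asynchronous sub-flows join the main queue only after the job is done.
    job.asyncSubFlows.forEach((sub) => queue.enqueue(sub));
    next = queue.dequeue();
  }
}

// Usage: one flow with a single asynchronous sub-flow.
const demoQueue = new FlowQueue();
demoQueue.enqueue({
  flowId: "main",
  steps: ["fetchHtml", "storeData"],
  asyncSubFlows: [{ flowId: "images", steps: ["downloadImage"], asyncSubFlows: [] }],
});

const progressLog: string[] = [];
runWorker(demoQueue, (e) => progressLog.push(`${e.flowId}:${e.step} ${e.completed}/${e.total}`));
// progressLog: ["main:fetchHtml 1/2", "main:storeData 2/2", "images:downloadImage 1/1"]
```

Because sub-flows are enqueued only after their parent finishes, every progress event for the parent is emitted before any event for the sub-flow.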

Computationally expensive tasks, such as launching Chromium to take a screenshot, were added to a separate queue served by beefier VMs.
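One simple way to express this split is a routing function that picks a queue from the block type. The sketch below is an assumption about how such routing could work: the queue names `main` and `heavy`, the `HEAVY_BLOCKS` list, and the `BlockTask` shape are all made up for illustration.

```typescript
type QueueName = "main" | "heavy";

interface BlockTask {
  id: string;
  blockType: string;
}

// Block types assumed to need more CPU and memory, e.g. anything that launches Chromium.
const HEAVY_BLOCKS: readonly string[] = ["screenshot"];

// Decide which queue a single task belongs on.
function queueFor(blockType: string): QueueName {
  return HEAVY_BLOCKS.indexOf(blockType) !== -1 ? "heavy" : "main";
}

// Group a batch of tasks by destination queue, preserving their order.
function routeTasks(tasks: BlockTask[]): Record<QueueName, BlockTask[]> {
  const queues: Record<QueueName, BlockTask[]> = { main: [], heavy: [] };
  for (const task of tasks) {
    queues[queueFor(task.blockType)].push(task);
  }
  return queues;
}

const routed = routeTasks([
  { id: "t1", blockType: "fetchHtml" },
  { id: "t2", blockType: "screenshot" },
  { id: "t3", blockType: "storeData" },
]);
// routed.main holds t1 and t3; routed.heavy holds t2.
```

Keeping the heavy work on its own queue means a burst of screenshots cannot starve the lightweight fetch and storage blocks, and the larger VMs only need to scale with the heavy queue's depth.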

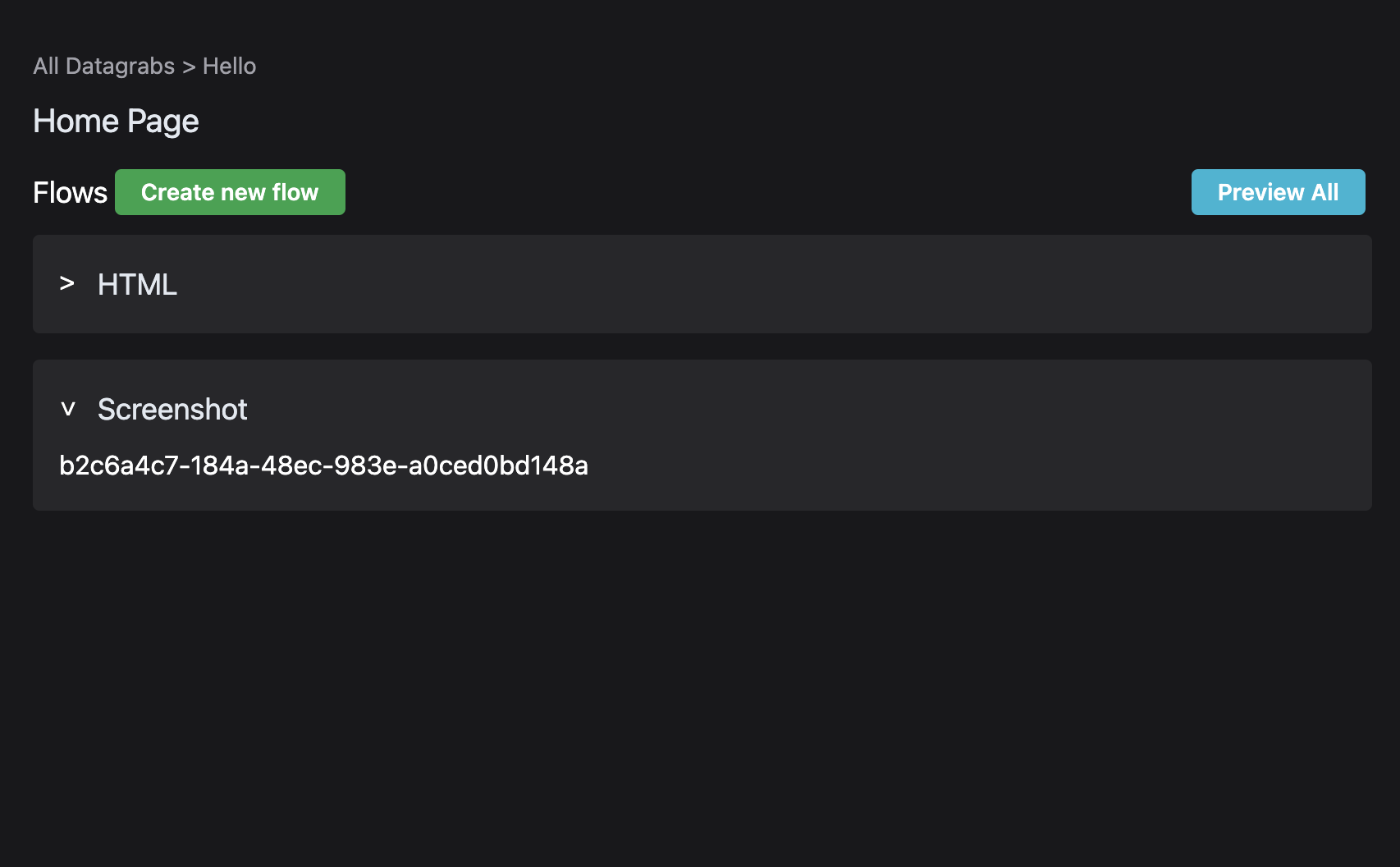

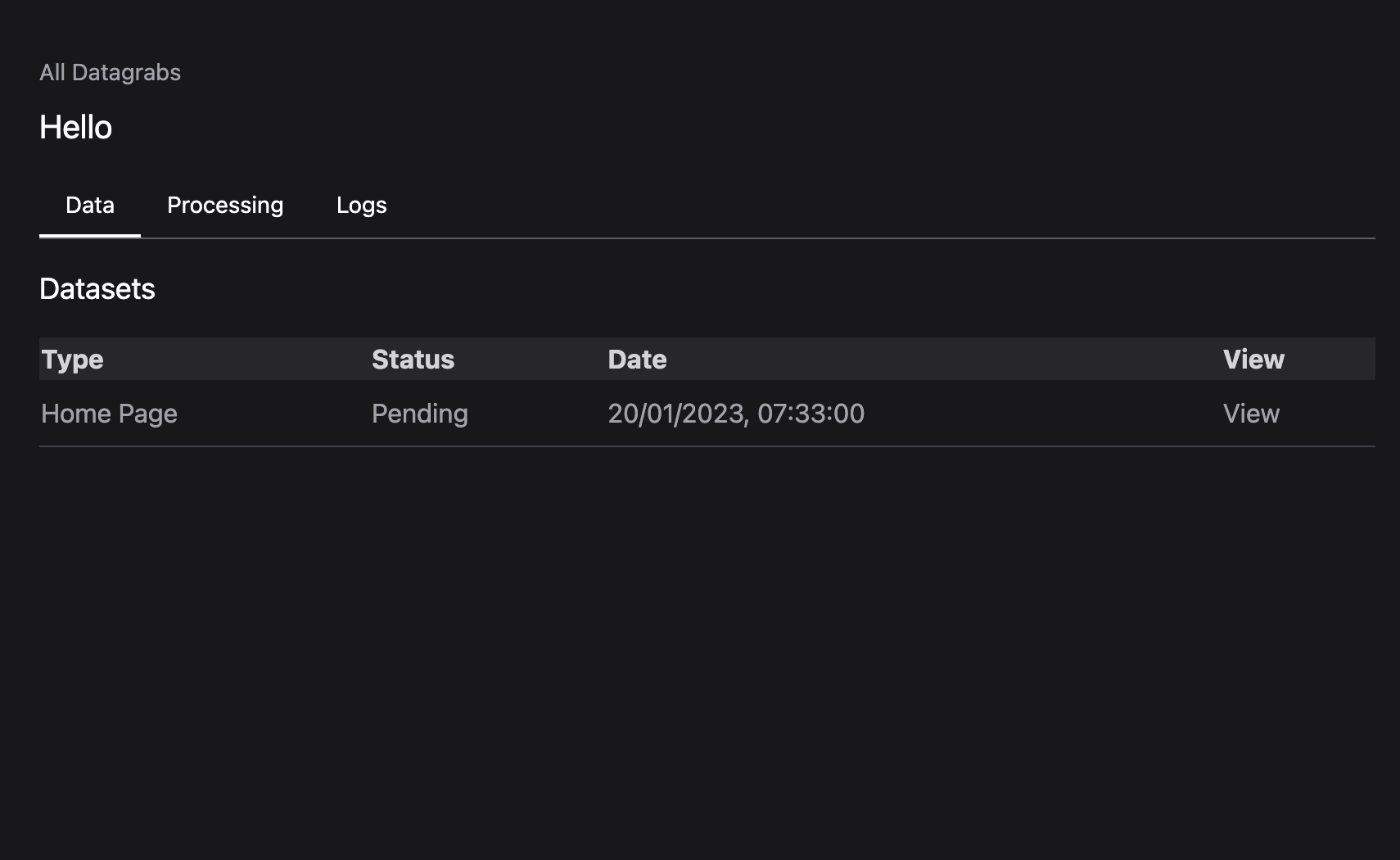

Finally, accumulated data was stored in the database in the format that the user defined in the flow.